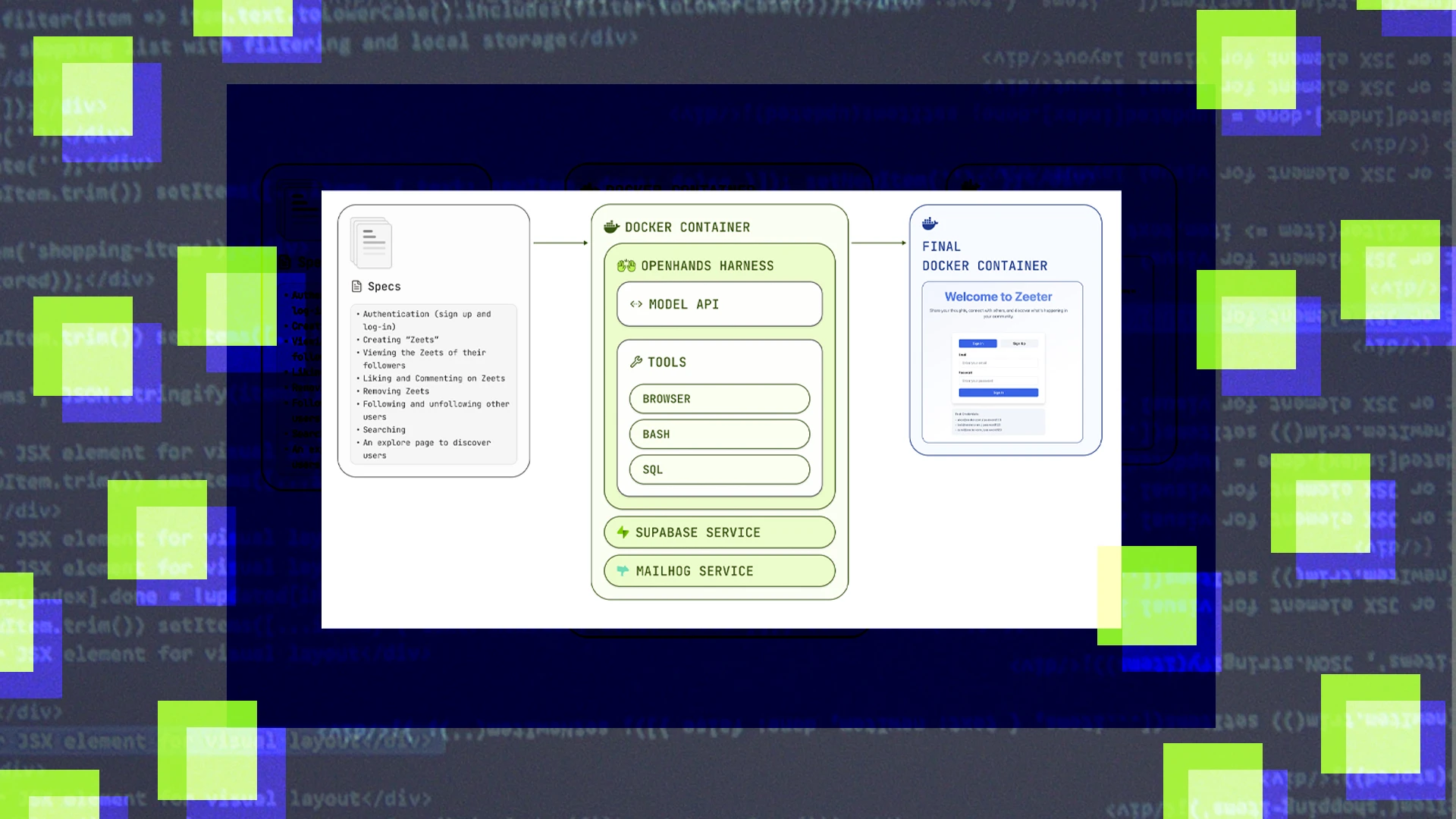

We’re releasing Vibe Code Bench v1.1.

This update makes several improvements to both the data and the evaluator for Vibe Code Bench.

The v1.1 update improves data quality by standardizing authentication modes, using explicit pre-seeded emails, and clarifying instructions on a small subset of tests.

On the evaluation side, the update enhances the browser-use evaluator with new tools for interacting with complex HTML elements and better handling of “waiting”. It also gives the evaluator a set of files (images and others) to use during testing.