Poker Agent was born out of curiosity from the Vals AI team to explore how models perform in strategic, competitive environments—an interesting complement to traditional benchmarks, though not a substitute for evaluation on real-world professional tasks. This collaborative experiment with Graphite Digital is captured at a point in time and may be updated less frequently than our core industry benchmarks.

Takeaways

- GPT 5.2 ranks first overall, representing a recovery in performance after GPT 5.1, which placed 7 positions lower than GPT 5. However, the model is 10× the cost and 20× the latency of top-performing alternatives like Gemini 3 Flash (12/25) (which places third).

- DeepSeek V3.2 (Thinking) outperforms many closed-sourced competitors, achieving an impressive TrueSkill rating of 1090.3 while maintaining exceptional cost efficiency at $0.0135 per hand.

- Models exhibited significantly varied playing styles: Grok 4.1 Fast (Reasoning) played most aggressively (57.2% aggressive post-flop actions/game), while the Gemini series (Gemini 3 Pro (11/25), Gemini 3 Flash (12/25) ) and DeepSeek V3.1 adopted more conservative strategies.

- The benchmark reveals that strategic decision-making under uncertainty remains challenging with no individual model dominant, highlighting the difficulty of generalizing language skills to competitive game environments.

Partners in Evaluation

Background

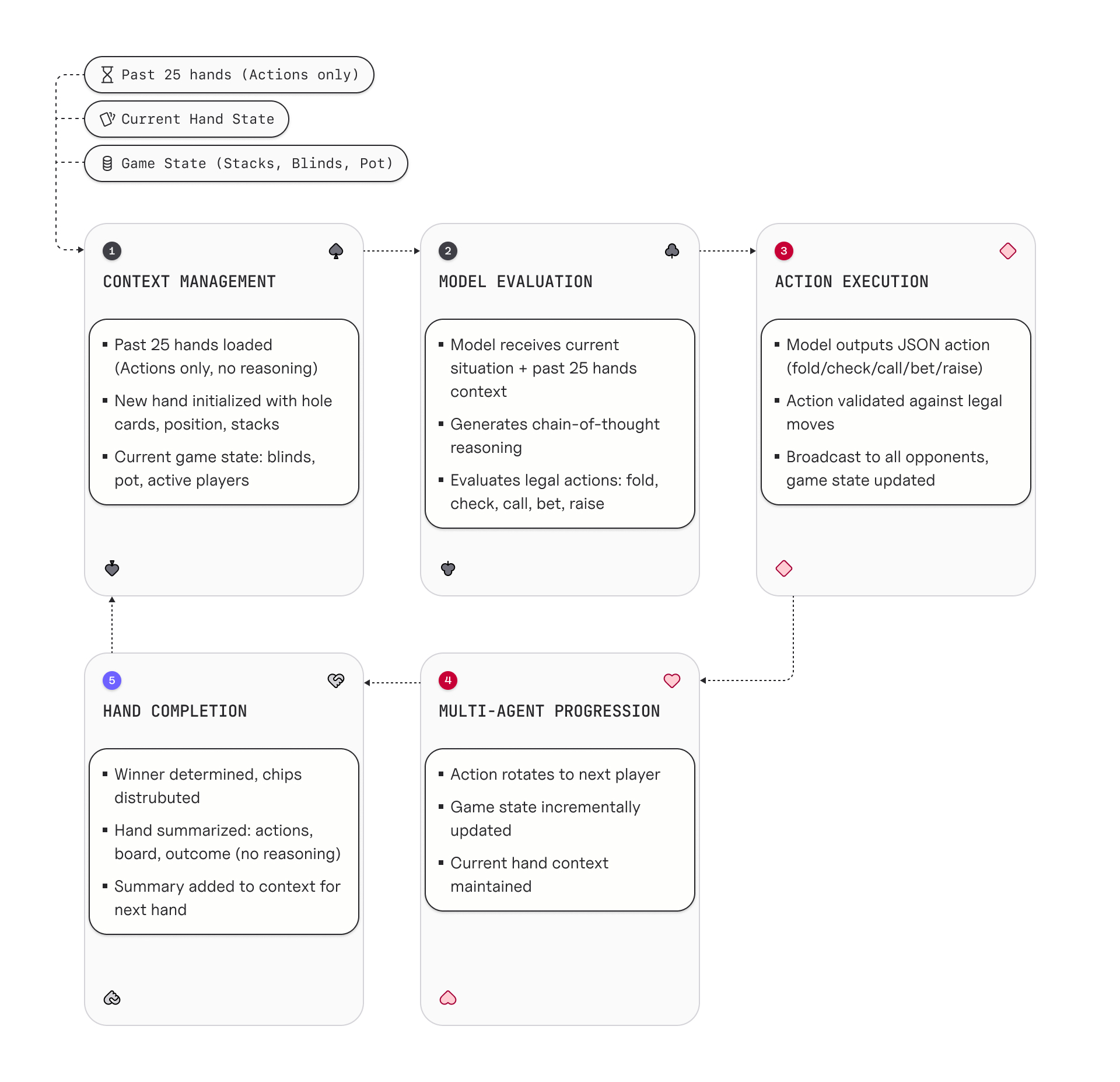

As large language models continuously advance, understanding their reasoning capabilities in different environments is increasingly important. Conventional benchmarks normally focus on knowledge retrieval, mathematical problem-solving, and coding ability, however these metrics often lack the complex decision-making skills required in competitive and strategic environments. Poker (No-limit Texas Hold’em) offers a unique environment that bridges this gap by testing probabilistic reasoning under uncertainty, multi-step strategic planning, and adaptive decision-making in competitive environments.

By benchmarking models in a poker environment, we gain insight into capabilities that are difficult to assess through traditional methods:

- Generalization: Can models trained on language tasks generalize to strategic game-playing with long-horizon strategy and planning?

- Test Time Adaptation: How do models adapt their decision making across hands given past context on the behavior of other models?

- Multi-Turn Reasoning: How do models reason about the game in a multi-turn environment with exploding context lengths of up to 100k+ tokens?

Poker offers a transparent and difficult-to-game evaluation framework. While proprietary benchmarks may advantage certain models through training data contamination, poker places models in a dynamic environment where high-level reasoning is necessary. In turn, we have created a multi-agent benchmark in collaboration with Graphite Digital where 17 frontier models play each other in 20,000 hands of poker in a 10 seat no-limit Texas Hold’em environment.

Results

The Pareto cost-curve above illustrates the relationship between model accuracy and cost per hand across different models. We note that despite GPT 5.2 achieving the highest overall rating (1131.8), it is significantly more expensive than Gemini 3 Flash (12/25) and Grok 4.1 Fast (Reasoning) despite only marginally better performance.

Gameplay Style Analysis

The charts above summarize how models played. We report several behavioral metrics:

- % Aggressive Actions: The share of postflop decisions that are bets/raises (vs. checks/calls). This captures how often a model applies pressure and builds pots.

- % Hands Played: How often a model voluntarily continues a hand (i.e., doesn’t fold preflop). Higher values usually mean looser preflop selection and more variance.

- % Folded After Investment: Fold rate after a model has already put chips in. Higher values can mean discipline or may indicate carelessness when the model enters then gives up.

- % Won At Showdown: Win rate when reaching showdown (the final stage). Higher values usually imply stronger ranges, while lower implies thinner calls/bluffs.

- Avg Bet Size (%): Average postflop bet size relative to the pot.

We don’t see a single “dominant” style—models with very different profiles can place well. For instance, GPT 5.2 plays relatively many hands with steady aggression and the best showdown win rate, while DeepSeek V3.2 (Thinking) is more selective but highly aggressive with one of the largest average bet sizes. In contrast, Gemini 3 Flash (12/25) succeeds with a much tighter, lower-aggression style. Weak results come from extreme loose-passive play. For instance Llama 4 Maverick plays many hands with very low aggression, high fold-after-invest, and a very low showdown win rate.

Case Study: Chip Counts Over Time

We have selected a few example games to showcase different models betting behavior. We note how fairly substantial early leads do not always translate to strong overall placement.

Case Study: Strategic Decision-Making with Pocket Aces

Below, we show an example of how different models played the same premium starting hand (pocket Aces) in different game instances. This hand demonstrates the significant variance in strategic approaches and outcomes, with chip results ranging from -72 to +467 chips depending on each model’s decision-making process.

Q

Hand 8, Seat 9 (seed 5042)

Hole Cards: Ah As (Pocket Aces)

Final Board: 3s 8h Th 3c Ks

Button Seat: 6

Variance in Outcomes: -72 to +467 chips (539 chip spread)

Outcome: +467 chips ($209 → $676)

**PREFLOP:**

*Raise to 10:*

I have pocket aces (Ah, As), the strongest possible starting hand in Texas Hold'em. Raising is mandatory to build the pot and gain information. A raise to 10 (4x the big blind) is standard preflop with premium hands — it's large enough to thin the field and extract value, but not so large that it scares off all opponents. Given my deep stack (209) and position (UTG+1, early), I want to establish strength early.

*Raise to 60:*

I still hold pocket aces (Ah, As), the strongest starting hand. XAI-Grok-4 has raised to 30, showing aggression, and three others have called, creating a multiway pot. The minimum raise is 60. Raising here isolates the initial aggressor, discourages speculative calls from weaker hands, and builds the pot with my premium holding. A raise to the minimum (60) keeps me committed but doesn't overcommit — I retain 149 chips behind for postflop play.

*Call 120:*

Llama4-Maverick has just raised to 120, putting me to a decision with 60 already invested. The minimum raise would be 240, but I only have 209 — meaning an all-in raise is my only raising option. However, given that three players are still in, this is likely a multiway pot against strong ranges. Calling keeps me in the hand without overcommitting preflop; I retain 149 chips for postflop play where I can realize my equity. Calling is the balanced, highest-EV choice here.

**FLOP: 3s 8h Th**

*Bet 150:*

I hold pocket aces on a relatively dry flop of [3s, 8h, Th]. This board has no obvious straight or flush draws and is unlikely to have connected strongly with my opponents' ranges. Betting here builds the pot with my premium hand, charges potential draws, and puts pressure on hands that may have flopped second-best. A bet of 150 (about 1/3 of my stack) is large enough to extract value and commit my opponents, while leaving me with 59 chips behind for the turn if needed.

+467

Most profitable line - demonstrated excellent value extraction by building the pot preflop through multiple raises, then continuing aggression on a favorable flop. The call of the large 3-bet kept the pot manageable while preserving stack depth for postflop play.

Methodology

Poker Environment

All experiments use a fixed-structure no-limit Texas Hold’em cash-game setup with ten players at the table. Each game consists of 100 hands played with standard blind rotation and stack carry-over between hands. At the start of every game, each player receives 100 big blinds (200 small blinds), and there is no dedicated time bank. There is no rake, no rebuys, and once a player loses all chips they remain out of action for the rest of that game.

We employ the TrueSkill rating system, developed by Microsoft, to evaluate poker model performance across multi-player tournaments. TrueSkill naturally handles the rankings of 10-player poker matches by modeling each player’s skill as a Gaussian distribution with mean (μ) and uncertainty (σ).

Models are ranked based on chip counts and elimination order across all games, with ratings continuously updated using Bayesian inference to account for confidence. We normalize the TrueSkill ratings to a 1000-baseline system for easier interpretation against traditional Elo ratings (the raw TrueSkill rating was multiplied by 40).

The conservative rating (μ - σ) provides a lower bound estimate that accounts for uncertainty, particularly useful when comparing models with varied nunbers of ganes played.

Variance Reduction

Poker outcomes over a 100-hand game are extremely noisy. The model that finished first in one seating was eliminated early in another, and with five different seeds under a single seating, the same model’s final chip counts swung widely from seed to seed.

We established our baseline through a round-robin tournament where ten frontier models played 10,000 hands against each other. This initial evaluation used a 10 seeds × 10 seatings design to control for variance. A seed represents fixed stochastic elements of the game (shuffles, runouts, etc.), creating reproducible scenarios, while a seating assigns players to specific table positions. The 10 game seeds provide a greater diversity of scenarios, while the 10 randomized seatings per seed balance positional advantages.

To rate new models, we created a matchmaking algorithm to maximize information gain. New models start from a default TrueSkill prior (μ = 25.0, σ = 8.333). For each baseline anchor candidate we compute an information score against every new model:

info_score(a, n) = (σa + σn) × exp(-|μa - μn| / 30)

We sum each anchor’s scores across all new models and draw anchors via weighted random choice from the top 20 candidates.

We evaluate each new model across at least 50 games. 20% of seeds are reused from existing games, where selected new models swap out with existing models for a direct comparison where they are given the same hands and boards.

Full code for Poker Bench will be released soon on Github.

Acknowledgements

This benchmark was developed in collaboration with Andy Xu, Felix Peng, Diego Agustin, and Grace Murray from Graphite Digital. Special thanks to the PokerKit team for providing the underlying poker game engine.

Please cite our work using the following:

Citation (BibTeX)

@misc{xu2025pokeragent,

title = {Poker Agent Bench: Can LLMs Strategize in Competitive Multi-Agent Environments?},

author = {Xu, Andy and Peng, Felix and Agustin, Diego and Murray, Grace and Nashold, Langston},

year = {2025},

month = dec,

howpublished = {Vals AI},

url = {https://vals.ai/benchmarks/poker_agent},

note = {Accessed: 2025-12-23}

}